Introduction

Core "Single Data Record" APIs

Full "Dataset Workload" APIs

Supported Data Sources for Cloud Data Connect

Using Interzoid with Databricks

The Databricks platform provides a cloud-based environment that combines data processing, analytics, and machine learning for datasets. It allows data engineers, data scientists, and analysts to work collaboratively on data projects. The platform is designed to handle large-scale data processing tasks and provides support for various programming languages such as Python, R, Scala, and SQL.

There are two ways to work with and process Databricks tables: through the Cloud Data Connect Wizard, or via an API call.

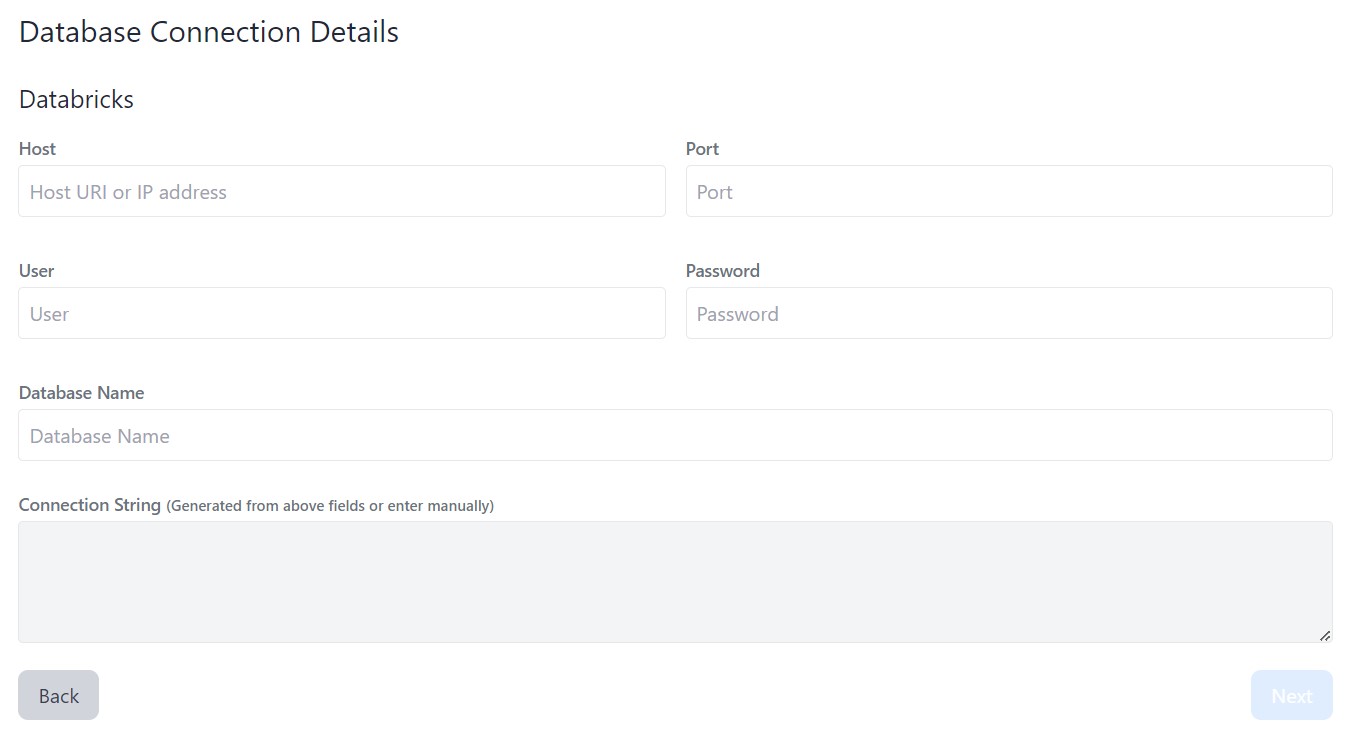

Using the Cloud Data Connect Wizard, you simply connect to an instance of Databricks with a connection string (see below) or providing connection parameters within the form, selecting the database and table you will use as your source. You must also provide the specific column you will be matching on. You need to choose the category of matching you want to perform (company names, individual names, or addresses).

Finally, select the type of matching you want to perform. This can be a match/inconsistency report that shows clusters of similar data, inconsistent, and otherwise matched data. You can also create an output file with a similarity key for every record in the file. You can create a new table that will be created within the source database that will store the similarity keys along with the corresponding value, or you can choose to generate the SQL that allows for the same. Creating a new table to store similarity keys enables you to perform your own custom types of matching using similarity keys as the basis of a join rather than the actual value of the data itself, and also enables matching across tables within your database. This will provide significantly higher match rates than matching on the original data values.

Here is a screen from the Cloud Data Connect Wizard showing a sample configuration. After you select your options, click "Run" and you will shortly have your results.

You can also access a Databricks table programmatically via an API call. Here is an example (place in the URL address bar of your browser and press 'enter'):

https://connect.interzoid.com/run?function=match&apikey=use-your-own-api-key-here&source=databricks&connection=your-specific-connection-string&table=companies&column=company&process=matchreport&category=company

For more details and documentation for the parameters of the API call, visit here.

Connection string example:

// For a cluster

"token:dapi1ab2c34defabc567890123d4efa56789@dbc-a1b2345c-d6e7.cloud.databricks.com:443/sql/protocolv1/o/1234567890123456/1234-567890-abcdefgh"

// For a SQL warehouse

"token:dapi1ab2c34defabc567890123d4efa56789@dbc-a1b2345c-d6e7.cloud.databricks.com:443/sql/1.0/endpoints/a1b234c5678901d2"

Supported optional connection parameters can be specified in param=value and include:

catalog: Sets the initial catalog name in the session.

schema: Sets the initial schema name in the session.

maxRows: Sets up the maximum number of rows fetched per request. The default is 10000.

timeout: Adds the timeout (in seconds) for the server query execution. The default is no timeout.

userAgentEntry: Used to identify partners. For more information, see your partner’s documentation.

Supported optional session parameters can be specified in param=value and include:

ansi_mode: A Boolean string. true for session statements to adhere to rules specified by the ANSI SQL specification. The system default is false.

timezone: A string, for example America/Los_Angeles. Sets the timezone of the session. The system default is UTC.

A "connection string" provides the parameters necessary to initiate the connection to a specific data

source on the Cloud for

analysis, enrichment, matching, or whatever data function from Interzoid is selected for use.

A connection string enables connecting to a data source using the Interzoid Cloud Data Connect product.

For additional information performing data matching, match reports, and the ability to match otherwise-inconsistent data in Databricks from within a Notebook utilizing DataFrames and a simple Python function (including the free Databricks Community Edition), see here.